Zero-Downtime Governed Runtime: Taproot Key-Path + Script-Path

Major upgrade to Via L2 zkEVM Rollup bringing governed runtime updates and Taproot-powered resilience into production.

- Governed wallet updates, applied live via Bitcoin events.

- Database-backed, insert-only audit log with efficient “latest-by-role” reads.

- Taproot + MuSig2 Bridge: private key-path spends, script-path fallback for recovery.

- Zero downtime for rotations, upgrades, or incident response.

- Type-safe roles ensure safety by construction.

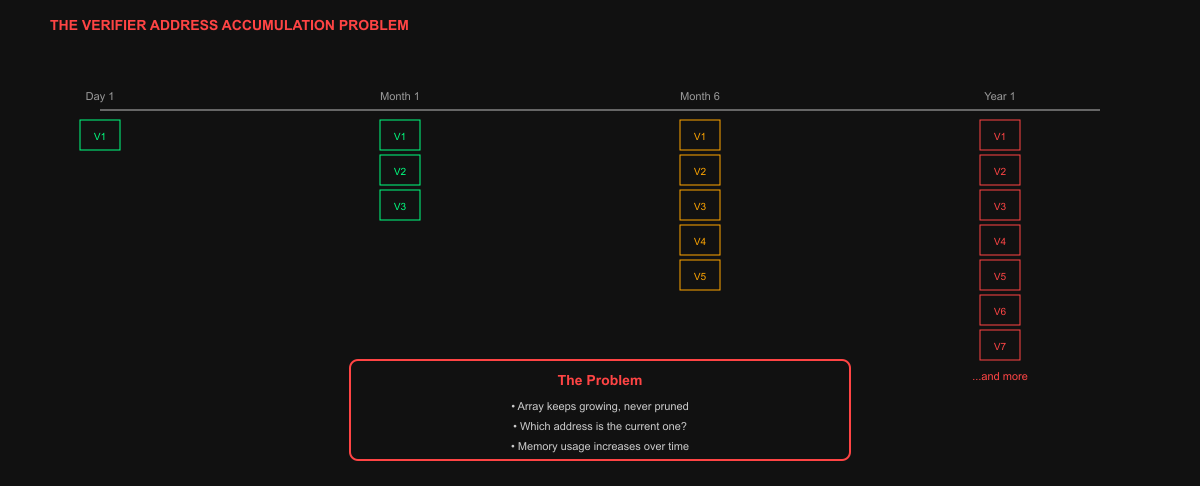

- Verifier bloat fixed: only the canonical, current verifier set is kept active.

This release closes one of the longest-standing gaps in our operations by fixing verifier bloat and unfreezing wallets from memory, all without network restarts, downtime, or risk of drift.

System wallet governance is now an ongoing process rather than a one-time bootstrap step, as the network can manage it continuously via consensus.

To make runtime updates reliable and fast, we rebuilt the system.

TL;DR

Database as source of truth

- A dedicated wallet table records every update (txid-linked, append-only).

- Efficient “latest-by-role” queries use Postgres DISTINCT ON with role + timestamp indexes.

- No accidental duplicates: unique constraint on (tx_hash, address, role).

Storage initializer

- Runs once at startup before Bitcoin components.

- Loads wallets from the DB if available; otherwise, bootstraps them from genesis.

- Injects a shared SystemWallets resource so all components stay in sync.

Runtime message processor

- Wallet updates are persisted atomically as they arrive.

- Components consume the shared resource instead of caching state in memory.

Role-aware type validation

- Sequencer / Verifier → P2WPKH (fast, single-sig SegWit)

- Bridge → P2TR (Taproot; MuSig2 key-path + tapscript fallback)

- Governance → P2WSH (transparent multisig)

Misconfigurations fail fast at startup before an unsafe state can form.

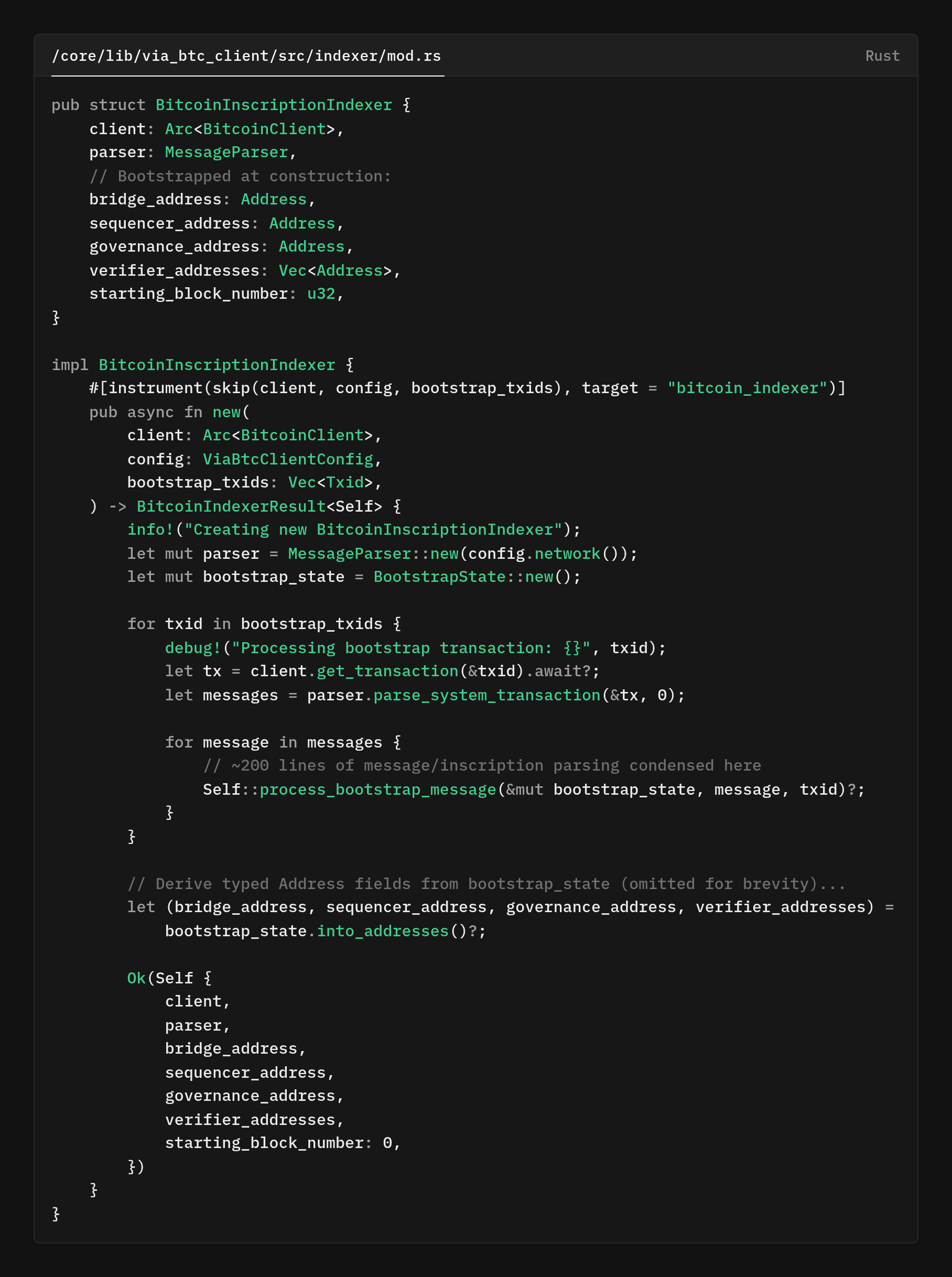

In our original indexer, system wallet addresses (Sequencer, Verifiers, Bridge, Governance) were discovered once during bootstrap and effectively frozen in memory.

That design made operations such as key rotation, incident response, and governance-driven upgrades painful, and impossible without downtime.

PR 222 changes the operating model. The verifier network and indexer now listen to on-chain wallet-update events and apply changes safely at runtime, gated by consensus and recorded in an insert-only audit log.

Practically, this means we can rotate keys, update the Bridge's Taproot commitment and governance policies without interruption.

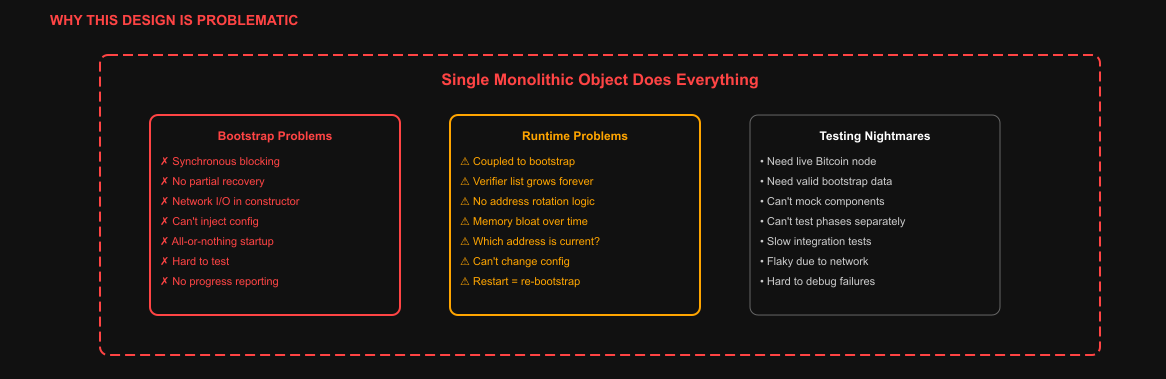

Why we had to redesign

The legacy indexer was fragile:

- Coupled bootstrap logic: Discovery, parsing, and validation all happened synchronously in the constructor (BitcoinInscriptionIndexer::new). Any failure aborted the startup.

- Frozen state: Wallets were cached in memory, making rotation impossible.

- Verifier bloat: Historical addresses accumulated with no pruning.

- No separation of concerns: The same object bootstrapped state and handled runtime indexing.

To make the runtime updates reliable and fast, we had to redesign the system around three pieces:

- A storage initializer that makes the database the source of truth from the first block

- A message processor that persists wallet updates atomically as they arrive, instead of caching only in memory

- A DAL and schema that enforce an immutable audit trail and efficient "current state" reads

We also standardized how Bridge and Governance express their policies on chain:

- Taproot (P2TR) for compact, private key-path spends using MuSig2

- Explicit script-path leaves for flexible, auditable policy upgrades and recovery logic

What's the effect?

We unlocked governed wallet updates on Bitcoin and made the critical paths fast enough to be safe in production.

Our Bitcoin indexer had a seemingly simple job: track system wallet addresses (sequencer, verifiers, bridge, governance) and monitor their transactions.

Here's what the original code looked like:

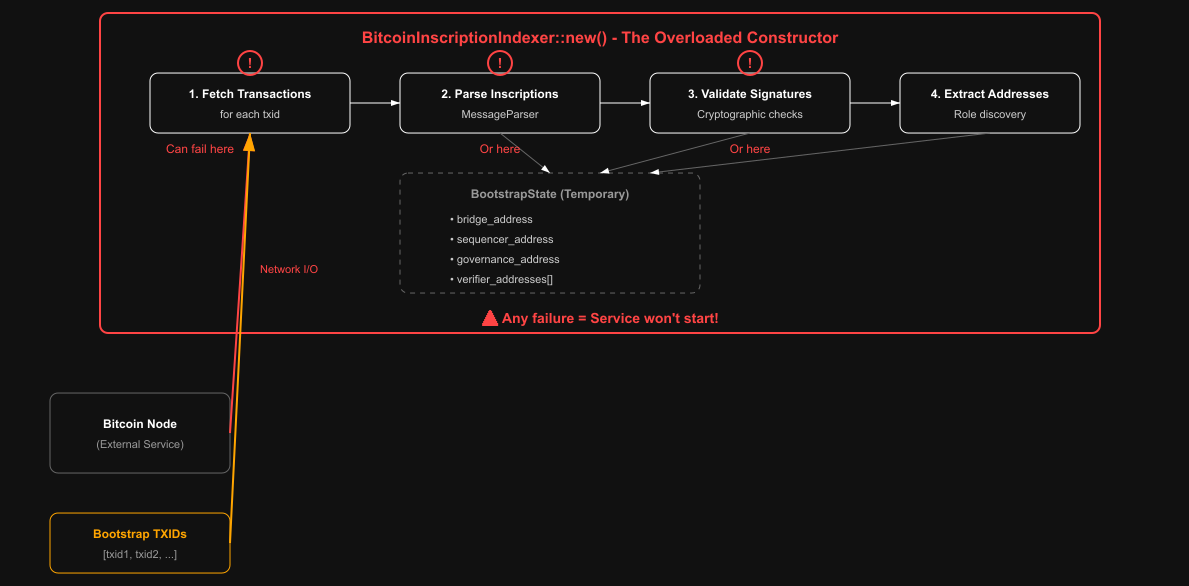

The legacy indexer combined discovery, parsing, and runtime state into a single, tightly coupled component. Calling BitcoinInscriptionIndexer::new() immediately kicked off a heavyweight bootstrap pass:

- It iterated a curated list of bootstrap transaction IDs (typed as Txid)

- Fetched each transaction from the Bitcoin node via BitcoinClient::get_transaction()

- Parsed the resulting inscriptions with MessageParser

- Extracted typed Address values for the sequencer, verifiers, bridge, and governance roles.

All of this happened synchronously inside the constructor’s inline loop. There wasn’t a separate bootstrap method.

The constructor then cached these addresses on the same long-lived indexer instance, effectively treating them as runtime “configuration.”

Several issues

To be clear, it didn't load entire wallets, all UTXOs, or the whole ledger into memory. The only things kept in memory long-term were the role addresses discovered during bootstrap.

Verifier address bloat arose because the verifier role was modeled as a list, and pruning wasn’t handled at this layer. Address rotations accumulated over time, as every verifier address present in the curated bootstrap set would remain in memory for the lifetime of the process.

While the memory footprint of a vector of addresses is modest, the consequence was that the code had to decide which entry was current, and startup became responsible for data retrieval, cryptographic validation, parsing policy, and state assembly all at once.

After bootstrap, the indexer continuously scanned new blocks, looking for events related to the cached role addresses (sequencer, verifiers, bridge, governance).

So what?

The original indexer design was fragile and hard to work with because it did everything at once during startup:

- Talked to the Bitcoin node

- Parsed complex messages

- Validated signatures

- Discovered important system addresses

All of this happened inside the constructor of the same object that also handled live block processing.

Once bootstrap was complete, the indexer switched into its normal operating mode, which is a steady state loop (runtime indexing):

- It scanned new blocks and looked for transactions related to specific roles (sequencer, verifiers, bridge, governance).

- When relevant transactions were found, it triggered actions like updating a database or broadcasting events.

- The role addresses discovered during bootstrap told it what to look for.

The problem was that the bootstrap logic (the code that talked to the Bitcoin node, parsed transactions, and figured out those role addresses) lived in the same object that also ran the live sync loop.

That meant the indexer couldn't even begin its normal operation unless the whole bootstrap succeeded perfectly.

- There was no separation

- No way to inject a known-good configuration

- If the bootstrap failed, the whole service failed

- The system was heavily dependent on successful synchronous initialization

Because BitcoinInscriptionIndexer::new() was tied directly to fetching transactions from the node, parsing messages, and validating signatures, any issue, such as a slow node, incorrect data, or a malformed inscription, could cause the startup to fail.

Since the constructor itself did network I/O and heavy logic, it was hard to test in isolation.

Transient RPC or parsing failures during BitcoinInscriptionIndexer::new() could abort the startup, and changing the bootstrap set required a full restart and re‑validation.

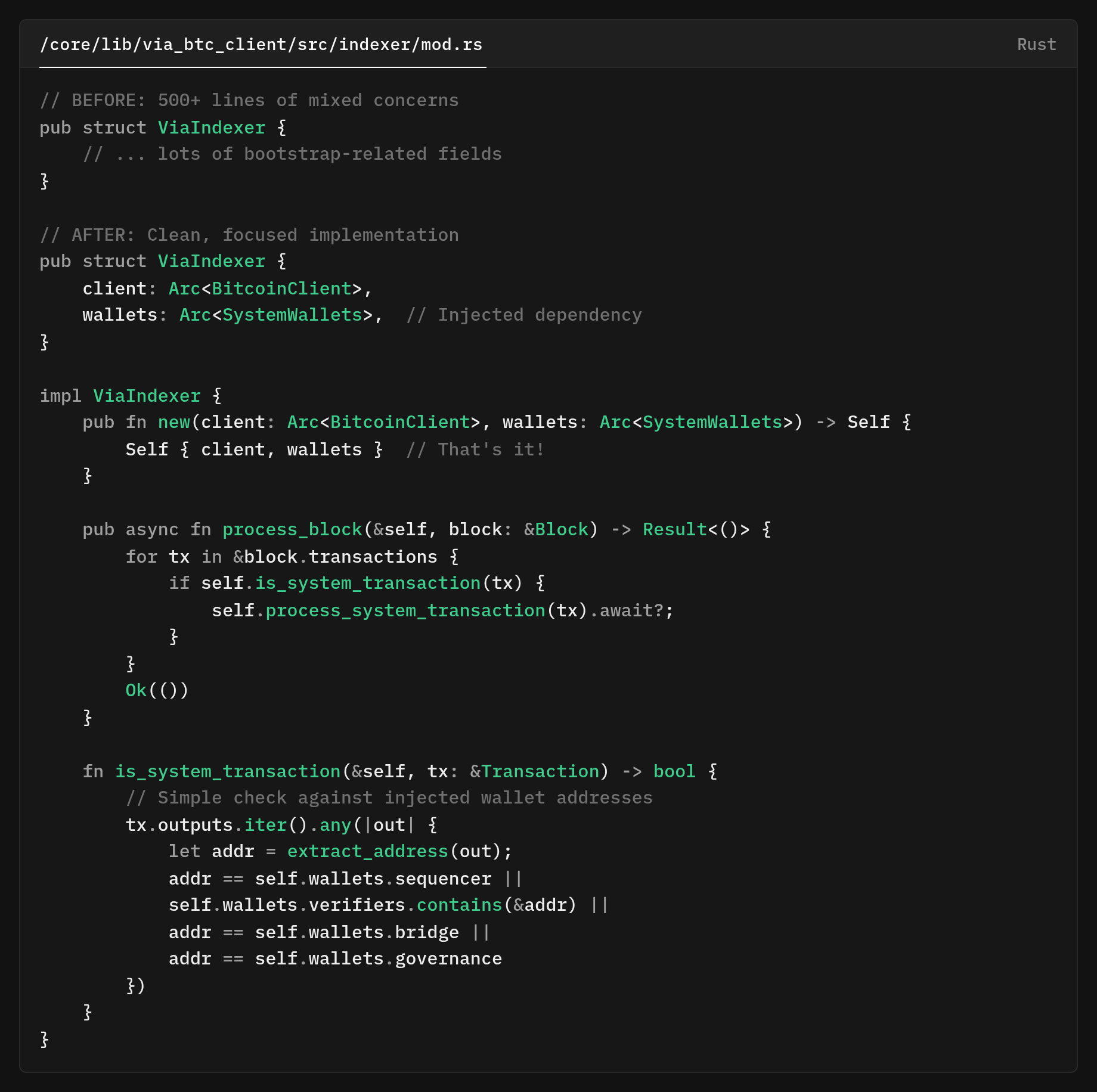

Here is our new refactored code

So how did we do that?

Divide, Conquer & Cache

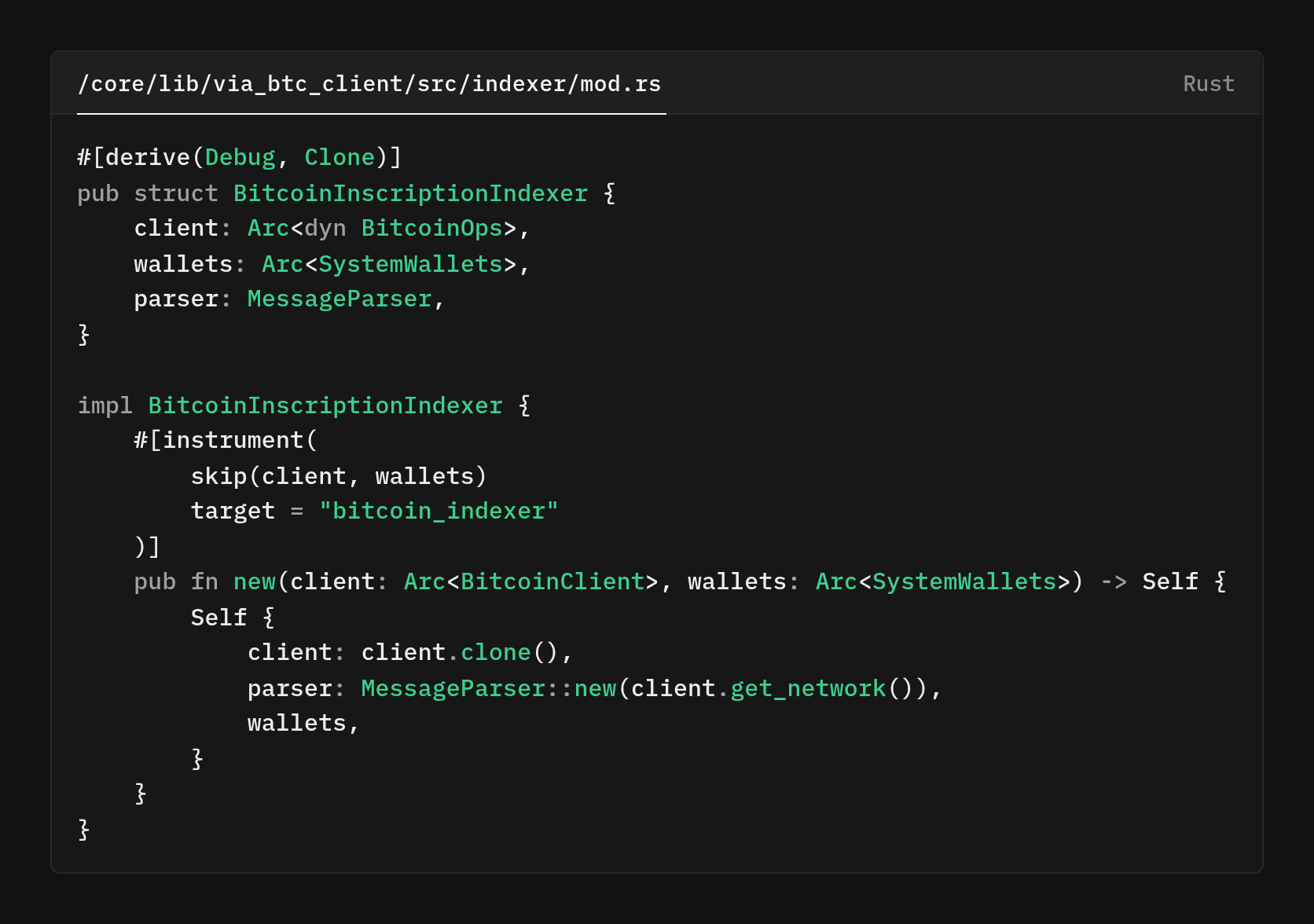

First, we created a dedicated database table for wallet storage.

The BIGSERIAL PRIMARY KEY column auto-increments and creates a unique B-tree index for fast lookups. role categorizes the wallet record and is used as the leading column in the composite index for “latest-by-role” queries.

address is the wallet address being tracked. tx_hash VARCHAR NOT NULL is the hash of the transaction that produced this wallet state/update. created_at TIMESTAMPTZ NOT NULL DEFAULT NOW() records the insertion time with timezone awareness. Postgres' now() is transaction-stable, which keeps ordering deterministic within a transaction.

A unique constraint on (tx_hash, address, role) ensures that the same “wallet update event” cannot be inserted twice.

This lets the system write wallet events safely and read them quickly. Duplicate events are rejected at the database level based on transaction, address, and role, which also saves us some CPU cycles.
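To make the uniqueness rule concrete, here is a minimal in-memory sketch of an insert-only log that rejects a repeated (tx_hash, address, role) triple. The `WalletRow` and `WalletLog` names are hypothetical stand-ins for illustration; the real table lives in Postgres and the constraint is enforced there.

```rust
use std::collections::HashSet;

/// One row of the wallet audit log (field names are illustrative).
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct WalletRow {
    pub role: String,
    pub address: String,
    pub tx_hash: String,
}

/// In-memory stand-in for the insert-only wallet table.
#[derive(Default)]
pub struct WalletLog {
    /// Append-only history; rows are never updated or deleted.
    rows: Vec<WalletRow>,
    /// Models the UNIQUE (tx_hash, address, role) constraint.
    seen: HashSet<(String, String, String)>,
}

impl WalletLog {
    /// Appends the row unless the same (tx_hash, address, role) already exists.
    /// Returns true when a new row was written.
    pub fn insert(&mut self, row: WalletRow) -> bool {
        let key = (row.tx_hash.clone(), row.address.clone(), row.role.clone());
        if !self.seen.insert(key) {
            return false; // duplicate event: ignored, history unchanged
        }
        self.rows.push(row);
        true
    }

    /// Number of rows in the history.
    pub fn len(&self) -> usize {
        self.rows.len()
    }
}
```

Replaying the same update event twice leaves the history with a single row, which is the property the constraint gives us at the database level.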

To fetch the most recent row for one role, the planner can satisfy the filter on role and read the newest entry first thanks to the index’s ordering. This is exactly what an index created with CREATE INDEX on (role, created_at DESC) is for.

To fetch the newest row for every role at once, we order by role, then by timestamp descending. The physical index order matches that pattern, so it’s efficient to step through each role’s newest record.

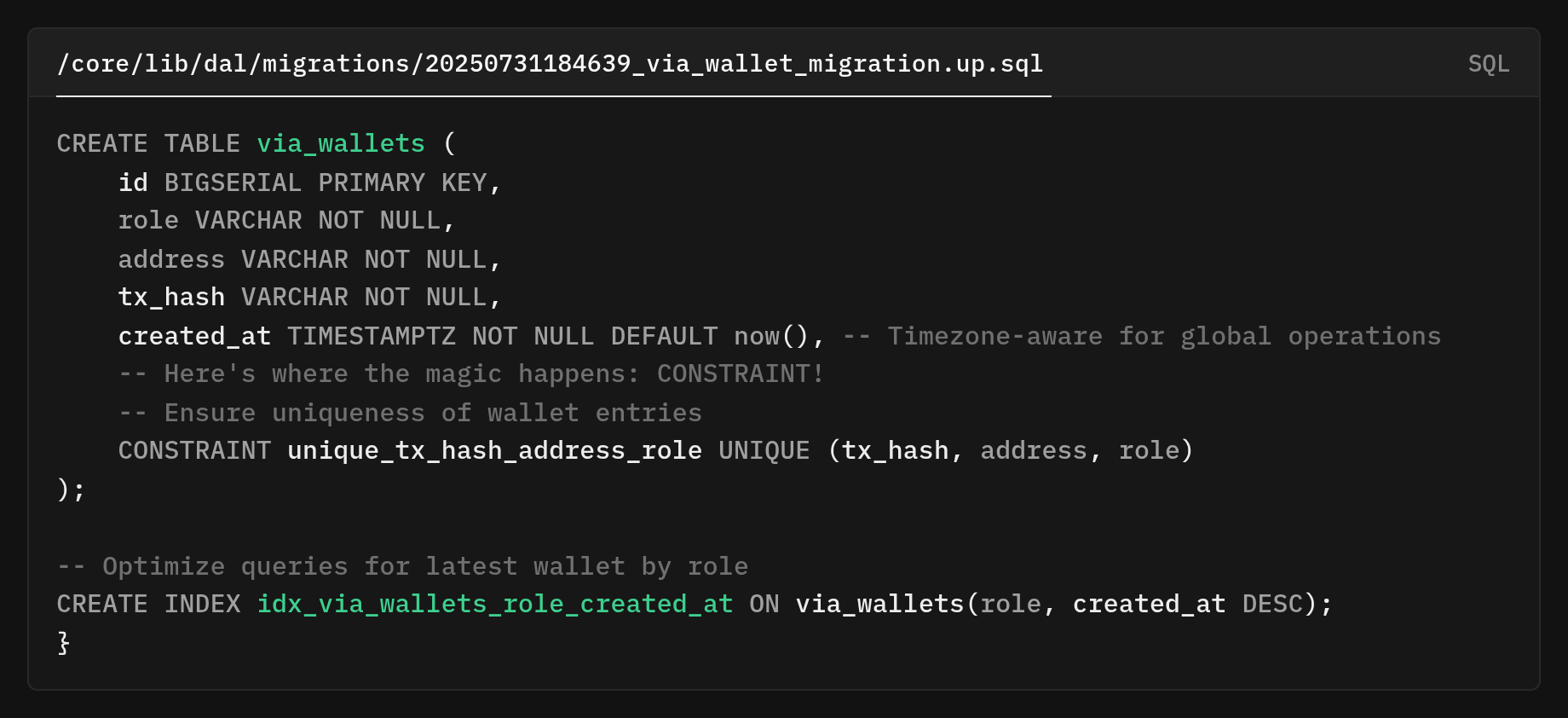

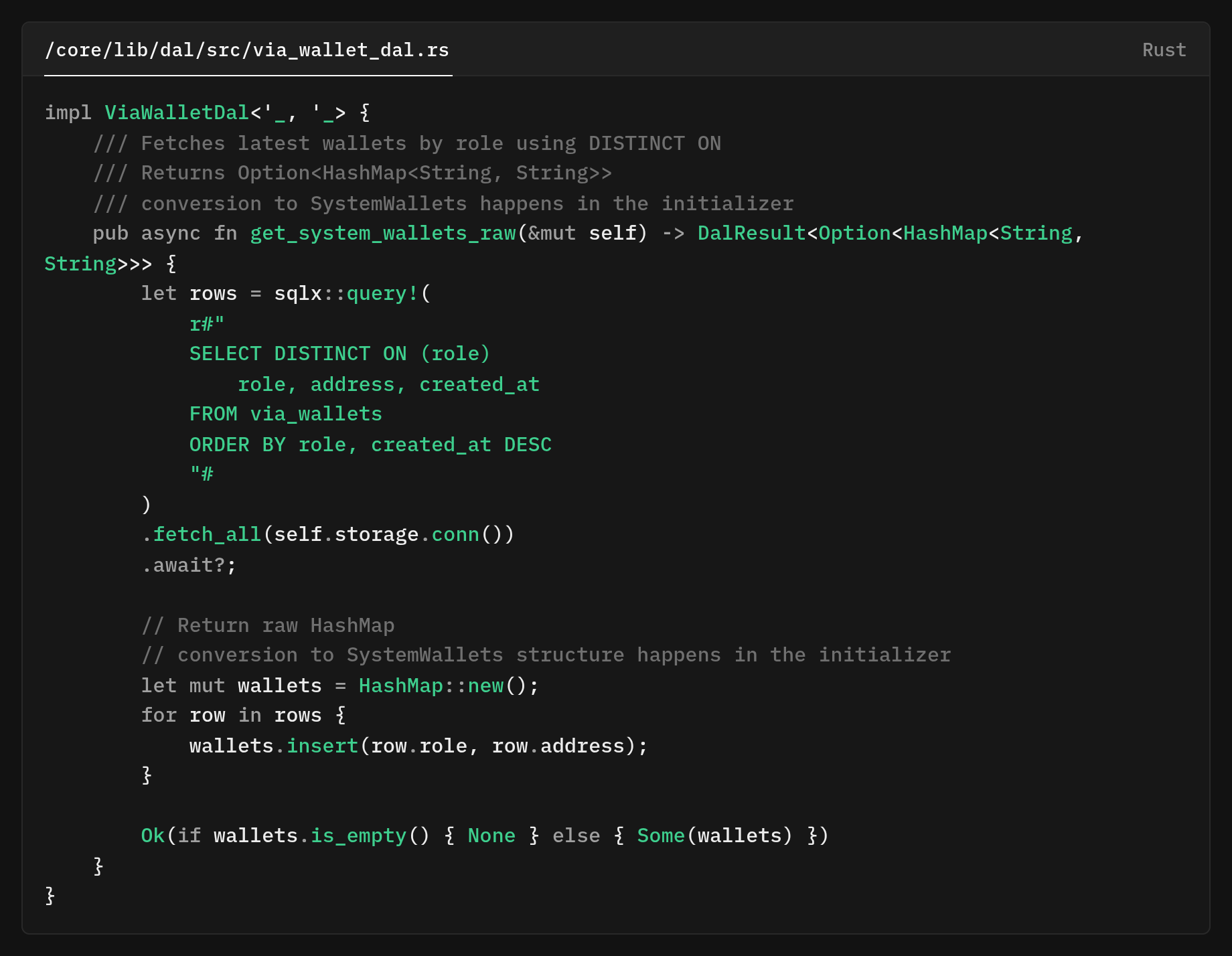

Optimize Data Access Layer

Here's where database knowledge really pays off. Instead of loading all wallets and filtering in memory, we use PostgreSQL's powerful DISTINCT ON clause.

Combined with our index, DISTINCT ON lets PostgreSQL find the latest wallet for each role with a single index scan. No sorting millions of records in memory.
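As a rough illustration of what “latest-by-role” returns, the sketch below pairs a query of the shape described above with an in-memory equivalent. The table name `via_wallets`, the column list, and the `WalletRow` type are assumptions made for this example, not the project's actual schema or DAL.

```rust
use std::collections::BTreeMap;

/// A query of the shape described in the text. Table and column names are
/// assumptions for illustration only.
pub const LATEST_BY_ROLE_SQL: &str = "\
SELECT DISTINCT ON (role) role, address, tx_hash, created_at \
FROM via_wallets \
ORDER BY role, created_at DESC";

#[derive(Debug, Clone, PartialEq, Eq)]
pub struct WalletRow {
    pub role: String,
    pub address: String,
    /// Stand-in for the TIMESTAMPTZ column.
    pub created_at: u64,
}

/// In-memory equivalent of the query: for every role, keep only the row
/// with the greatest `created_at`. On a timestamp tie the first row seen wins.
pub fn latest_by_role(rows: &[WalletRow]) -> BTreeMap<String, WalletRow> {
    let mut latest: BTreeMap<String, WalletRow> = BTreeMap::new();
    for row in rows {
        let is_newer = latest
            .get(&row.role)
            .map_or(true, |current| row.created_at > current.created_at);
        if is_newer {
            latest.insert(row.role.clone(), row.clone());
        }
    }
    latest
}
```

The in-memory version has to touch every row, which is exactly the work the (role, created_at DESC) index lets Postgres avoid.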

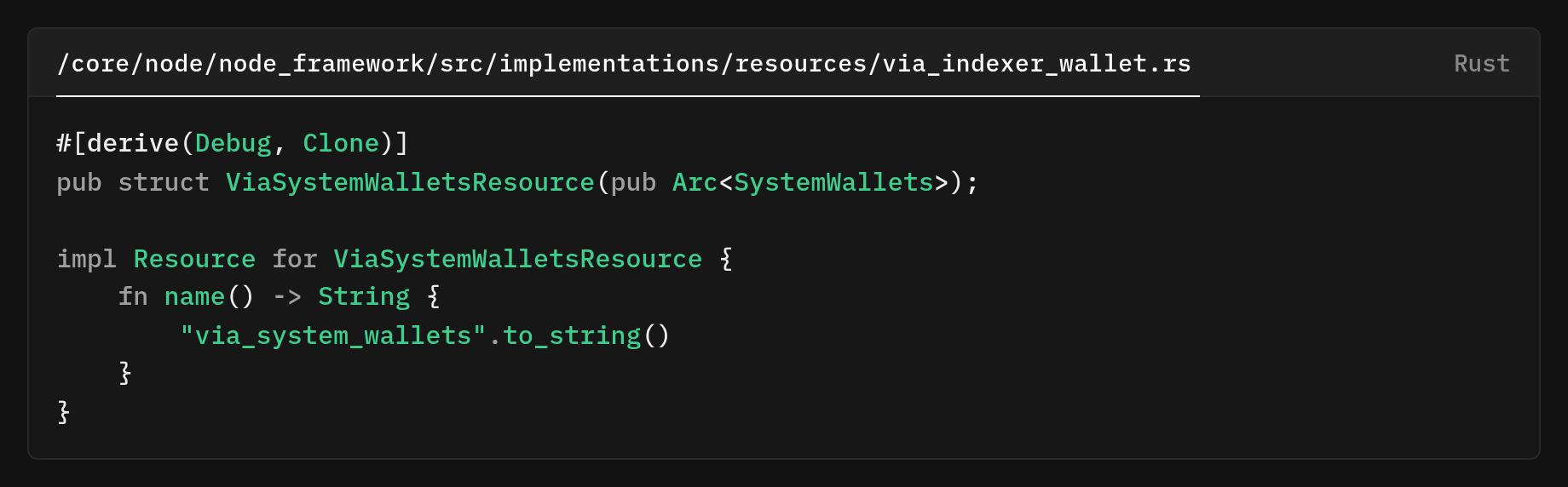

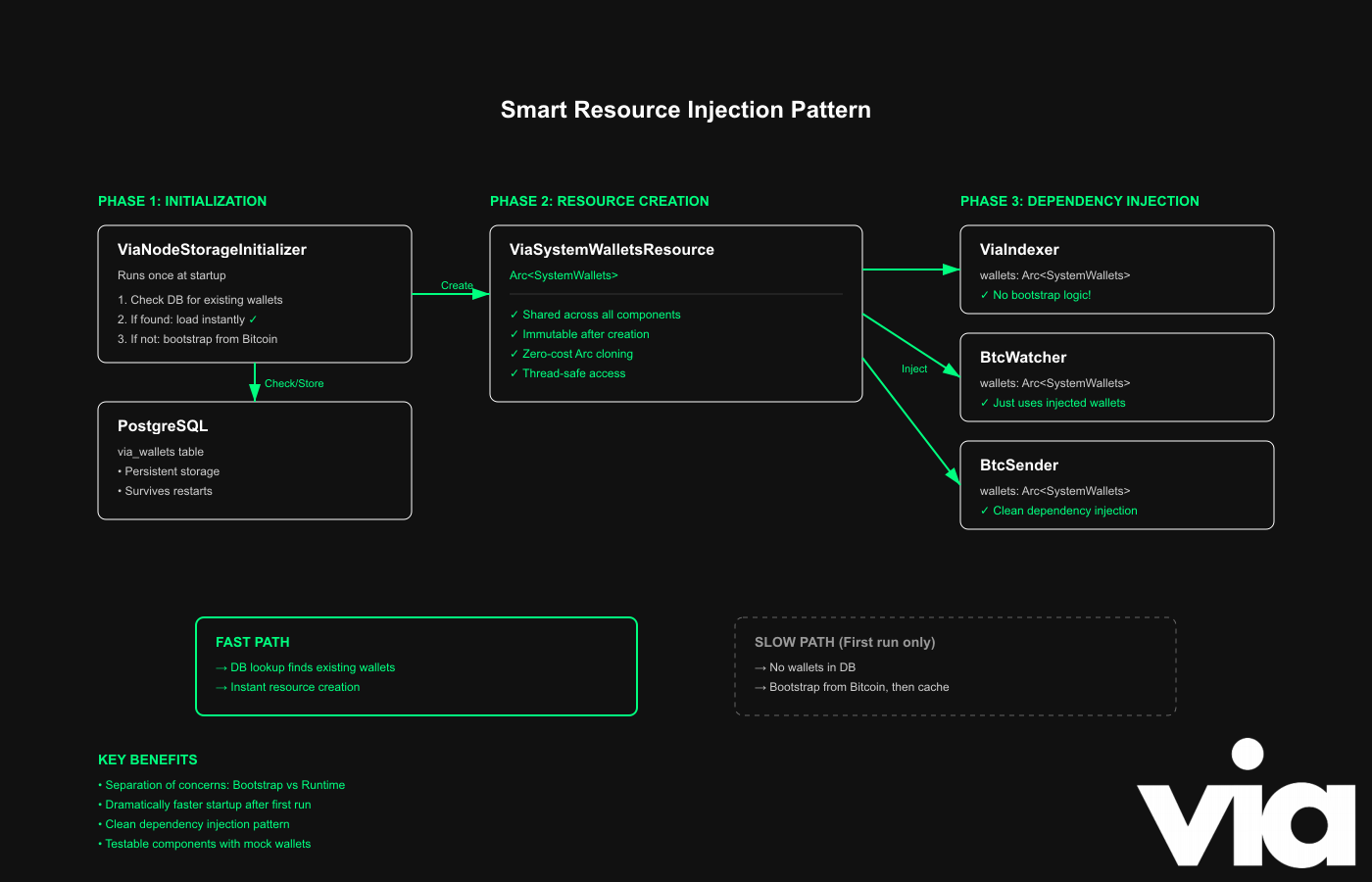

Caching with Resource Injection

Instead of each component bootstrapping wallets independently, we created a shared resource.

The core idea is a shared, cacheable wallet resource that is computed once at startup, loaded from the DB if available.

If not found, it's computed from the genesis bootstrap using the Bitcoin client, stored in the DB, and returned for injection into components.

Phases:

- Registration of the shared resource: ViaSystemWalletsResource.

- Startup initializer retrieves the resource:

  - DB read: Retrieve system wallets quickly.

  - Bootstrap on first run: Uses ViaBootstrap and BitcoinClient to process transactions.

- Runtime: Injects Arc<SystemWallets> from the container for consistent views.

The benefits are fast, stable, idempotent startups with less repeated work and a consistent view of state:

- Single source of truth

- Reduces boot time and RPC calls

- Concurrency-friendly with Arc

- Robust across restarts
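The load-or-bootstrap flow can be sketched as follows. This is a simplified model under stated assumptions: the `WalletStore` trait, `MemoryStore`, and `init_system_wallets` are hypothetical names, and the `bootstrap` closure stands in for the ViaBootstrap + BitcoinClient path.

```rust
use std::sync::Arc;

#[derive(Debug, Clone, PartialEq, Eq)]
pub struct SystemWallets {
    pub sequencer: String,
    pub verifiers: Vec<String>,
    pub bridge: String,
    pub governance: String,
}

/// Hypothetical storage interface; the real DAL is not shown in this post.
pub trait WalletStore {
    fn load(&self) -> Option<SystemWallets>;
    fn save(&mut self, wallets: &SystemWallets);
}

/// Returns the shared wallets handle: DB first, genesis bootstrap only when
/// the DB has nothing stored yet.
pub fn init_system_wallets<S, F>(store: &mut S, bootstrap: F) -> Arc<SystemWallets>
where
    S: WalletStore,
    F: FnOnce() -> SystemWallets,
{
    let wallets = match store.load() {
        Some(existing) => existing, // hot path: fast restart
        None => {
            let fresh = bootstrap(); // slow path: first run only
            store.save(&fresh);
            fresh
        }
    };
    // One shared, read-only handle that every component can clone cheaply.
    Arc::new(wallets)
}

/// Minimal in-memory store used to exercise the sketch.
#[derive(Default)]
pub struct MemoryStore {
    pub saved: Option<SystemWallets>,
}

impl WalletStore for MemoryStore {
    fn load(&self) -> Option<SystemWallets> {
        self.saved.clone()
    }
    fn save(&mut self, wallets: &SystemWallets) {
        self.saved = Some(wallets.clone());
    }
}
```

On the second call the bootstrap closure is never invoked, which is what makes restarts cheap and idempotent.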

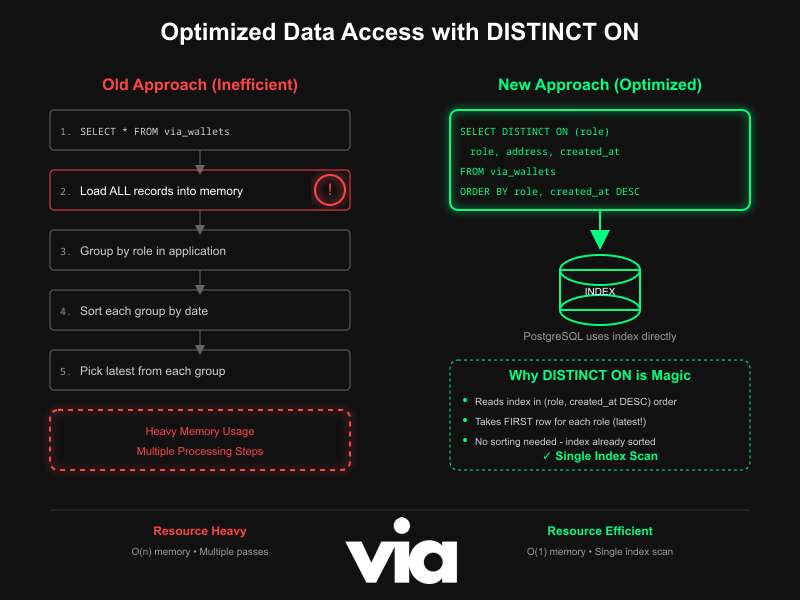

Refactoring for Single Responsibility

We completely separated the bootstrap logic from the indexer by introducing a dedicated initialization layer.

This refactoring alone removed over 300 lines of code and made the indexer's purpose crystal clear.

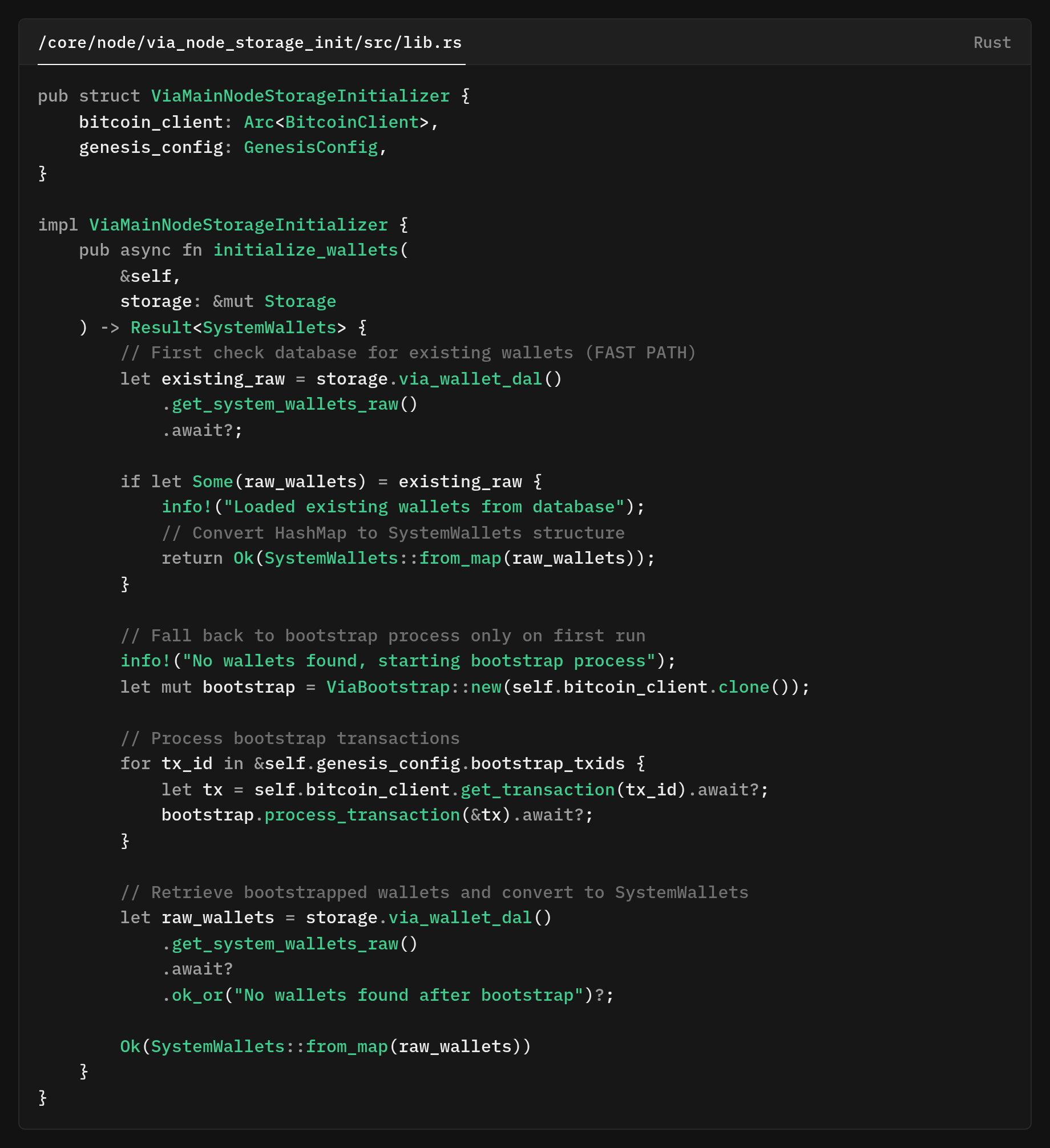

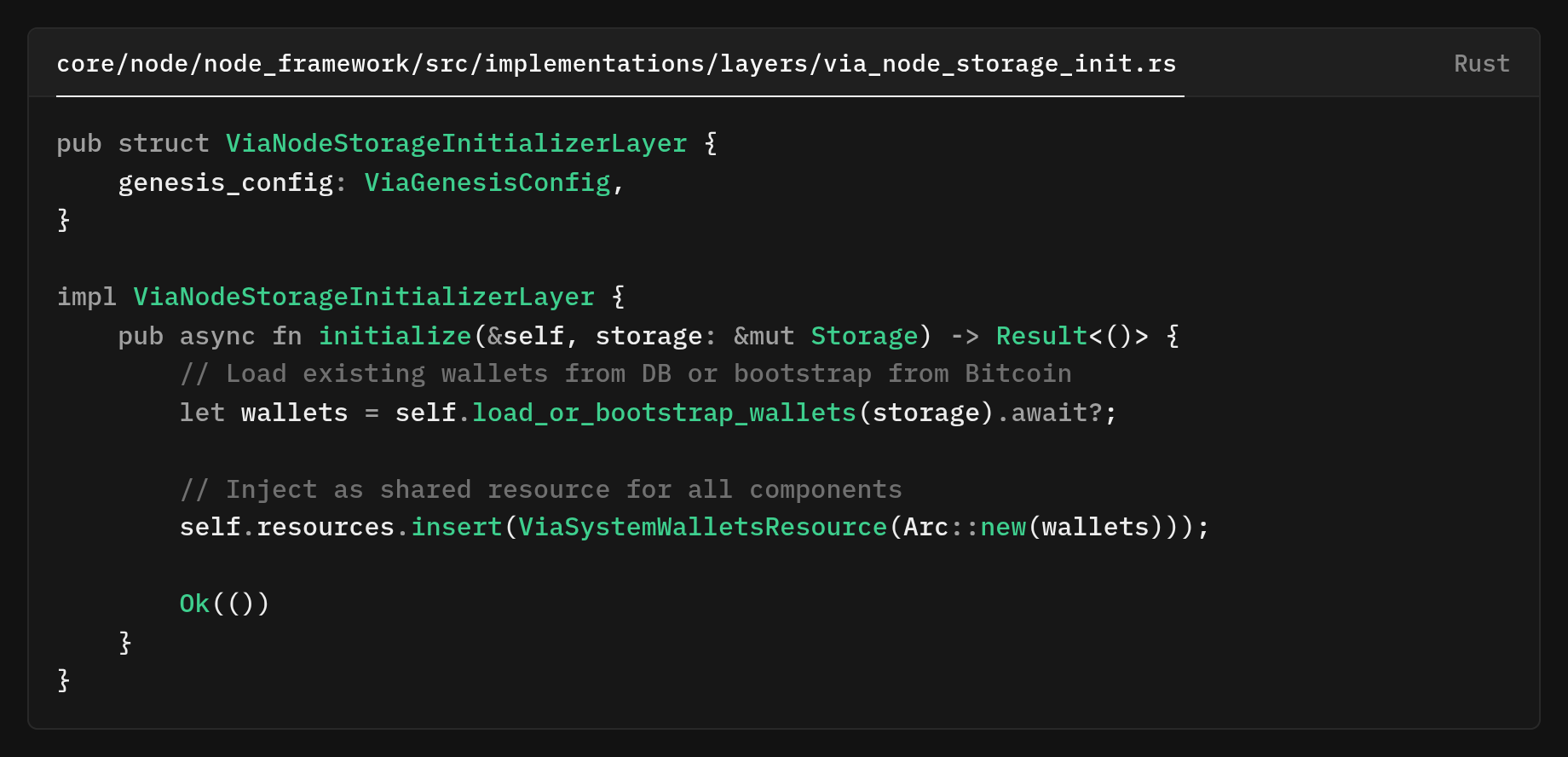

New Initialization Architecture

This is the new ViaNodeStorageInitializerLayer code that handles all wallet initialization before any BTC components start.

A dedicated startup layer prepares wallets before any Bitcoin-related components run. The layer’s async initializer runs once at boot to ensure wallets are ready and shared.

It calls a loader to either fetch wallets from the DB or derive them if missing. After obtaining the wallets, it injects a single shared instance into the app’s resource container, wrapped in a resource type so that all components can reuse the same data.

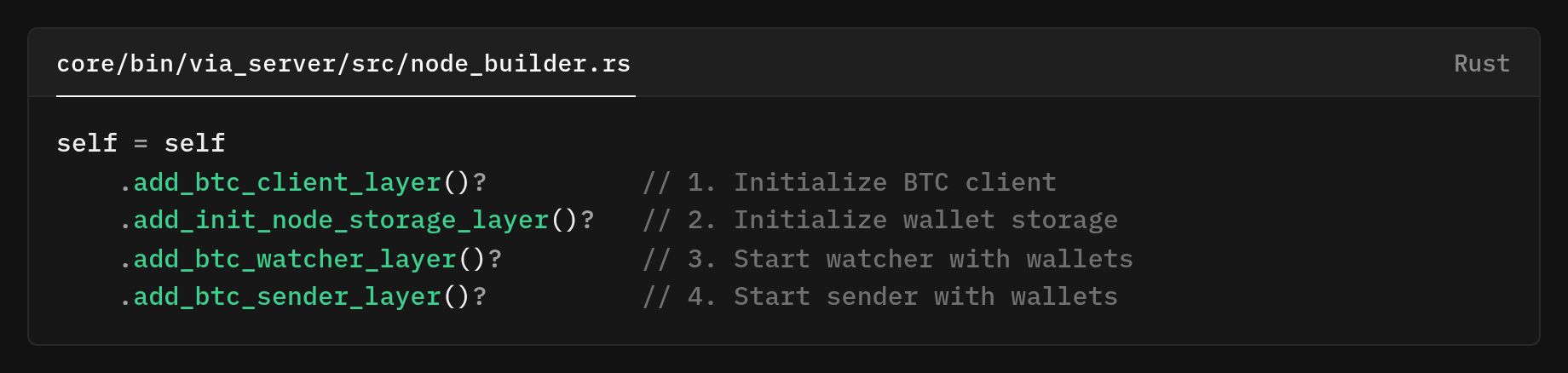

The builder hook ViaNodeBuilder::add_init_node_storage_layer makes sure that initialization is properly ordered.

The node builder wires layers in a strict order so dependencies are ready when needed (see core/bin/via_server/src/node_builder.rs).

The builder chain enforces the following order:

- BTC client is initialized first (needed for bootstrap if DB is empty).

- The wallet storage layer then runs and produces the wallets.

- Only after wallets exist do the watcher/sender components start, so they receive a ready-to-use shared resource

- Start sender to wallet

The initializer lives in the new crate core/node/via_node_storage_init/ (manifest at core/node/via_node_storage_init/Cargo.toml), keeping initialization logic cleanly separated from runtime components.

- Deterministic startup: Wallets are always created before anything tries to use them, so no race conditions.

- Fast restarts: Most of the time, wallets just load straight from the DB; the slow bootstrap only runs on a fresh or empty DB.

- Single source of truth: One shared wallet instance means no duplicate work, and all components stay in sync.

- Simpler architecture: Wallet setup happens in one place; consumers just get the injected resource.
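The ordering guarantee can be modeled with a toy layer runner. This is only a sketch: the `Layer` struct, the layer names, and `run_in_order` are hypothetical and do not reflect the real node framework API, which is not shown in this post.

```rust
use std::collections::HashSet;

/// A startup layer: declares the resources it needs and the one it provides.
pub struct Layer {
    pub name: &'static str,
    pub needs: &'static [&'static str],
    pub provides: &'static str,
}

/// Runs layers in the order given and fails if a layer's inputs are not ready.
pub fn run_in_order(layers: &[Layer]) -> Result<Vec<&'static str>, String> {
    let mut ready: HashSet<&'static str> = HashSet::new();
    let mut started = Vec::new();
    for layer in layers {
        for need in layer.needs {
            if !ready.contains(need) {
                return Err(format!("{} started before {} was ready", layer.name, need));
            }
        }
        ready.insert(layer.provides);
        started.push(layer.name);
    }
    Ok(started)
}

/// The order described in the text: BTC client, wallet init, then watcher/sender.
pub fn node_layers() -> Vec<Layer> {
    vec![
        Layer { name: "btc_client", needs: &[], provides: "btc_client" },
        Layer { name: "wallet_storage_init", needs: &["btc_client"], provides: "system_wallets" },
        Layer { name: "btc_watcher", needs: &["system_wallets"], provides: "btc_watcher" },
        Layer { name: "btc_sender", needs: &["system_wallets"], provides: "btc_sender" },
    ]
}
```

Swapping the first two layers makes the runner return an error, mirroring the “consumer starts before state exists” race the builder ordering rules out.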

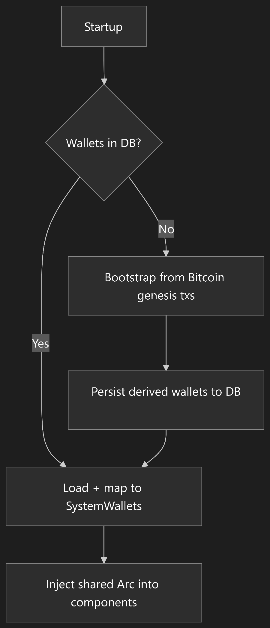

Think of it as a “pre-flight” step: the initializer layer primes the system with a cached, shared wallets object, and then the rest of the flight systems (watcher/sender) take off using that ready state.

How the pre-flight maps to the system

- Pre-flight checks: Do we already have wallets? The initializer asks the database. If yes, it uses them as-is. If not, it computes them from genesis transactions and stores them.

- Fueling/loading: That computed or loaded result is turned into a single shared in-memory object and registered in the app’s resource container.

- Cabin briefing: By injecting one shared resource, every component reads the same snapshot. Nothing re-fetches or re-derives its own copy.

What happens after pre-flight

- Engines on: The watcher and sender are started only after the wallets resource exists, so they can run immediately against a known-good, consistent state.

- Smooth takeoff: Restarts are fast because the database path is the hot path; the expensive bootstrap path runs only once (or when the DB is empty).

The Result

- Predictable startup: No component waits on another to finish custom initialization; order is enforced, and state is ready.

- Consistency: A single source of truth prevents drift between components.

- Performance: Avoids repeated network calls and recomputation; most runs read from DB and return a cached, shared in-memory handle.

- Reliability: Eliminates races like “two components try to bootstrap at the same time” or “consumer starts before state exists.”

Via MuSig2 bridge

Our current Via MuSig2 bridge is a protocol-controlled on-chain settlement endpoint on Bitcoin. It is not a single operator's wallet. Instead, it's governed by the network and configured through policy by independent participants.

At a cryptographic level, it is a Taproot output that supports two complementary ways to authorize movement of funds. For routine operations such as withdrawals, a group of operators collaborates off-chain using MuSig2 to produce a single aggregated Schnorr signature.

On-chain, this looks like a standard Taproot key-path spend, which keeps the transaction size small, fees predictable, and internal structure private.

There are 2 ways to spend:

- Key-path spend: You present a single signature for the Taproot output key (the tweaked internal key). This is a fast, private path on-chain. It looks like a simple single-signature spend.

- Script-path spend: You reveal one leaf from the committed script tree (such as a multisig or timelocked recovery rule) and satisfy it on-chain.

Via uses MuSig2 so that multiple parties can jointly act as a single key: on-chain, it appears as if only one party is signing.

Several bridge participants each hold a piece of the key (a key share). When they coordinate a MuSig2 signing round, the result is one combined, aggregated signature.

On-chain, this kind of spend looks exactly like a normal Taproot transaction from a single user (a single-sig spend, to be more precise). It's private and efficient, despite being created by a group. Just one signature, like a normal wallet.

As you might imagine, this brings several advantages over using multiple separate keys. It means lower fees, faster processing, and no obvious signs on the blockchain that a group was involved.

Outsiders can’t easily see how the Bridge is managed or how many people took part, which helps keep its internal structure private and secure.

Why MuSig2 runtime updates matter

Production systems don't stand still. Keys age and must be rotated. Teams change, and so can risk profiles. What starts as a 2-of-3 setup might later require 3-of-5 or another threshold.

If something goes wrong, the system must be able to respond immediately, without pausing the network. For a critical system wallet, it is not acceptable to take services offline for redeployment, manually edit configs, or let each process rely on its own stale copy of the state.

For a threat response, we need to rapidly exclude a compromised key and move funds to a safer policy without taking the system offline.

We also need resilience, so the same Taproot address includes a fallback: a threshold multisig script path. If some signers go offline, a quorum can still authorize a spend through this reveal path.

Each accepted policy update is anchored to a Bitcoin transaction and recorded, making the system fully auditable. Off-chain edits are hard to audit, as it's difficult to determine who changed what and when.

Runtime updates let you change the locks while the shop stays open

One of the most interesting parts of this refactor was improving our Bitcoin script handling to support modern address types.

Role-aware address validation

Every system wallet has a purpose, and the Bitcoin address type is chosen to fulfill that role. Operational roles like the sequencer and verifiers use native SegWit (P2WPKH) for speed and low fees.

The bridge uses Taproot (P2TR) to operate privately through MuSig2 aggregation in normal situations, while still keeping a script-path fallback for emergency recovery.

Governance uses P2WSH, where a transparent multisig is desirable so that decision processes are explicit on-chain.

Why do different roles use different Bitcoin address types, and how do we prevent misconfiguration in practice?

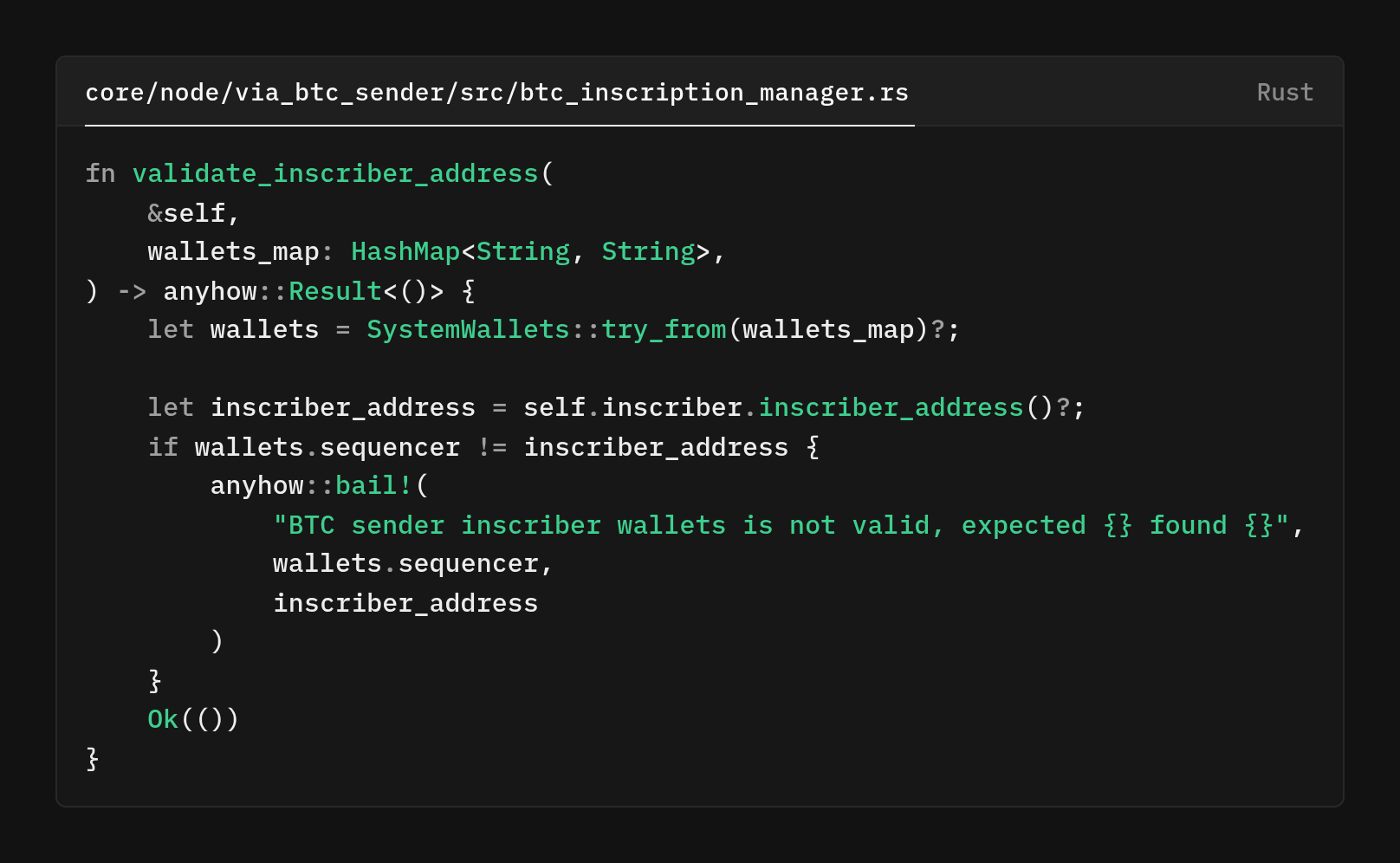

The validator ensures our BTC sender uses the canonical address for the sequencer role so that every on-chain action is attributable and verifiable.

First, it loads the canonical SystemWallets snapshot from storage (always typed and validated). Then it queries the inscriber with inscriber.inscriber_address() to get the Bitcoin address it is configured to use right now.

The check wallets.sequencer != inscriber_address() compares that address to the canonical sequencer address in wallets.sequencer. If they differ, startup aborts with a clear error. If they match, the node proceeds.

Why would such a check even be needed, you may wonder.

Config files, environment variables, or operator setups can get out of sync. The check stops the node from accidentally writing inscriptions with the wrong key. Watchers and verifiers assume all inscriptions come from the sequencer address. If a different key is used, their validation fails, or worse, it could create ambiguity about which inscription is real.

It's also a defense strategy. Even if a component is misconfigured or a key rotation wasn't propagated everywhere, the node fails fast instead of silently producing an untrusted on-chain state.

When keys or policies change, the canonical snapshot is updated first. Components only continue if they match that snapshot, which means rotations don't cause downtime or inconsistency.
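The fail-fast comparison described above can be sketched in a few lines. Addresses are plain strings here to keep the example dependency-free, and `validate_sequencer_address` is a hypothetical name; the real validator works with typed Bitcoin addresses.

```rust
/// Reduced snapshot holding only the field this check needs.
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct SystemWallets {
    pub sequencer: String,
}

/// Fails fast when the inscriber's configured address is not the canonical
/// sequencer address from the snapshot.
pub fn validate_sequencer_address(
    wallets: &SystemWallets,
    inscriber_address: &str,
) -> Result<(), String> {
    if wallets.sequencer != inscriber_address {
        return Err(format!(
            "sequencer address mismatch: canonical {} but inscriber is configured with {}",
            wallets.sequencer, inscriber_address
        ));
    }
    Ok(())
}
```

A caller would run this once during startup and abort on `Err`, so a stale key never gets the chance to write an inscription.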

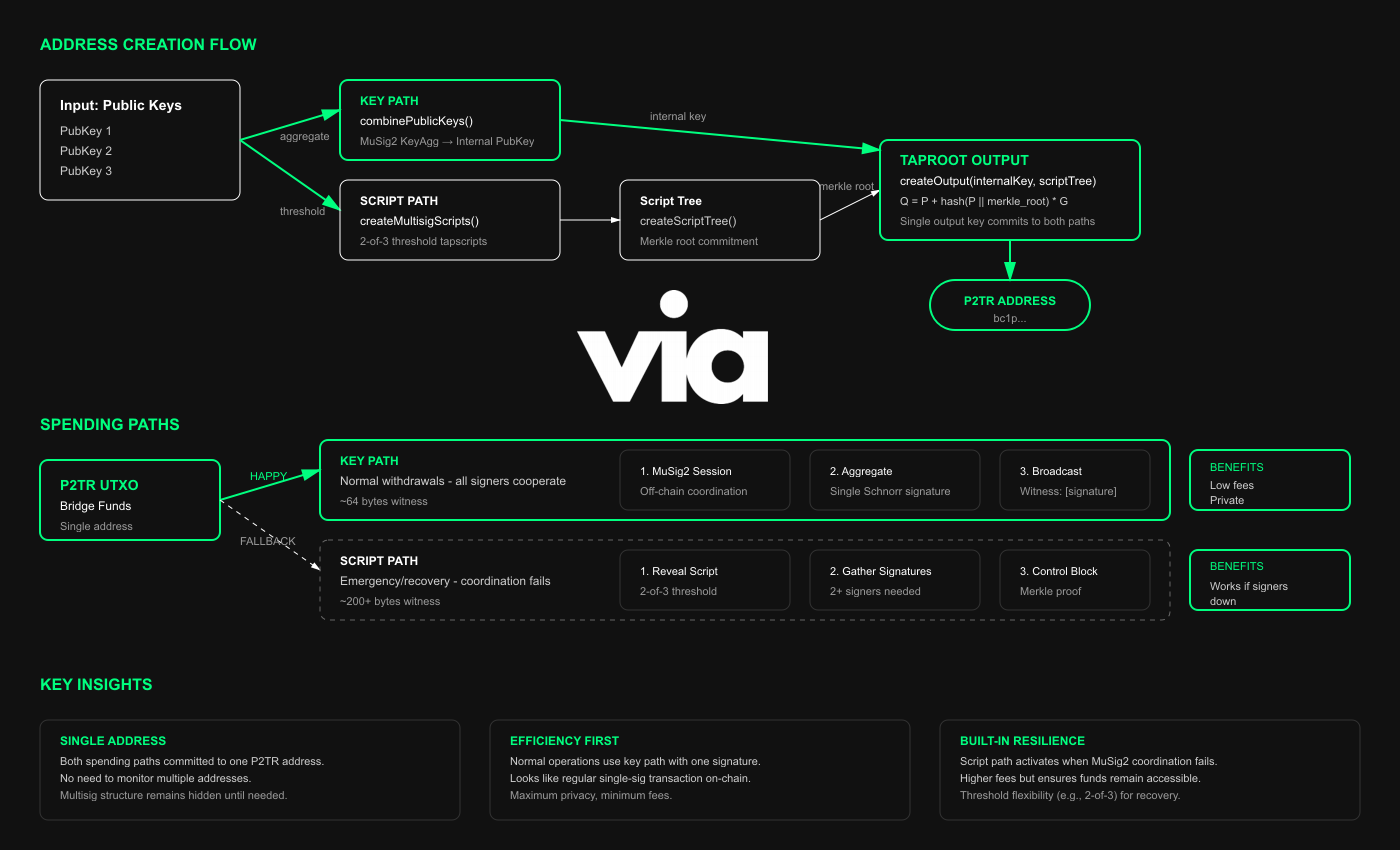

Why Taproot for the Via MuSig2 Bridge

The bridge needs one address that's cheap and private, yet stays alive if some signers are down. Taproot gives both in a single output:

- Signers run MuSig2 off-chain to produce one Schnorr signature. On-chain it looks like a single-sig P2TR spend with low fees and no policy reveal.

- The same output also commits to a threshold tapscript (e.g., 2-of-3). If coordination fails, reveal and satisfy the script on-chain. This is larger but keeps funds movable.

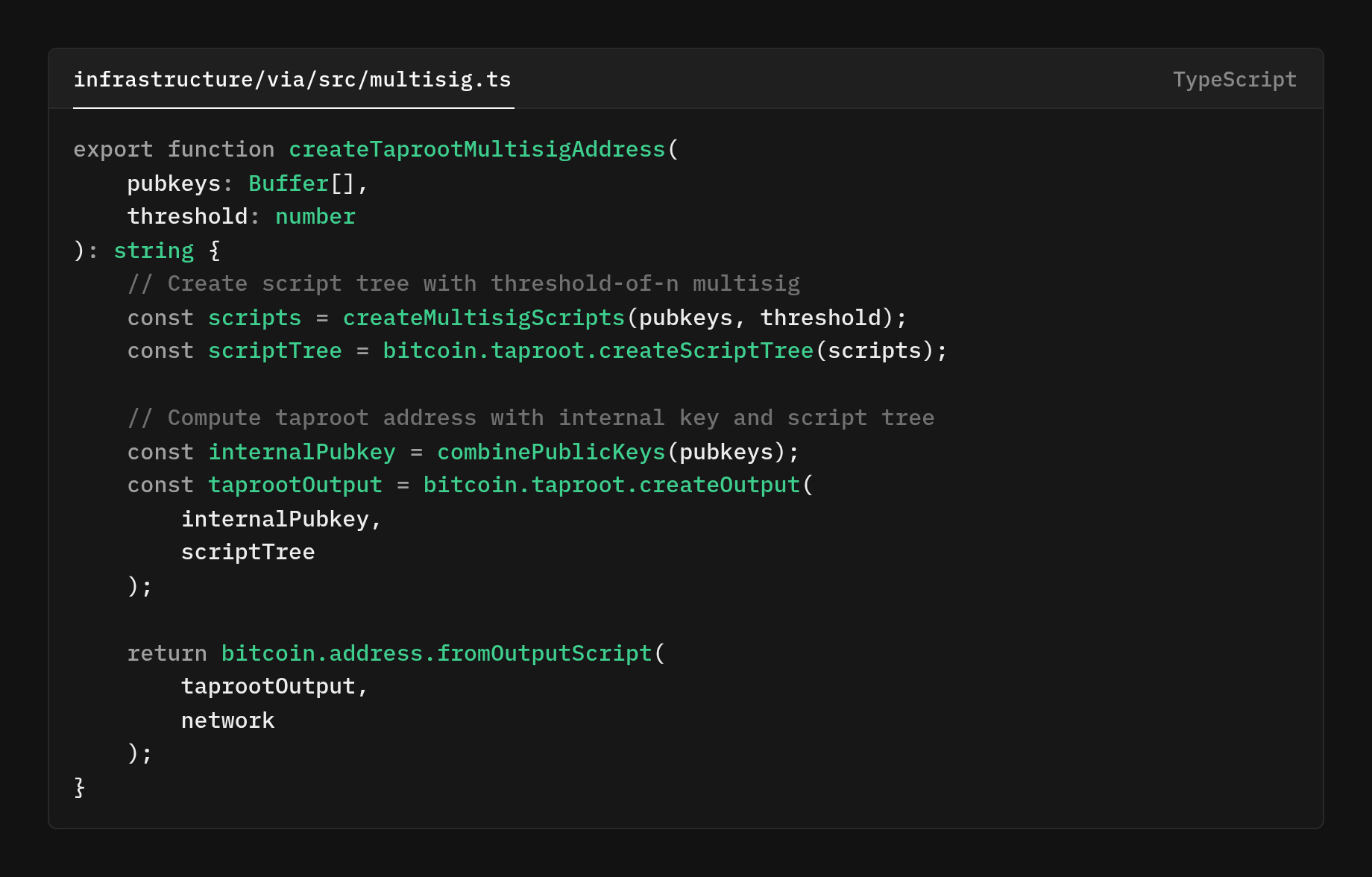

So, what does this function createTaprootMultisigAddress do?

- Build the fallback policy (tapscript leaves): createMultisigScripts(pubkeys, threshold) produces one or more tapscripts (e.g., a 2‑of‑3 policy). These are the script‑path options you can reveal if coordination fails.

- Commit the scripts into a Taproot tree: bitcoin.taproot.createScriptTree(scripts) arranges the leaves into a Merkle tree and yields the Merkle root that will be committed into the address. If there’s one leaf, the root is just that leaf’s hash.

- Derive the internal public key (for the key path): combinePublicKeys(pubkeys) aggregates participant keys into a single x‑only internal key (think: MuSig2 KeyAgg). This key is used for the cooperative key‑path spend (the “looks like single‑sig” path).

- Form the Taproot output key (the commitment): bitcoin.taproot.createOutput(internalPubkey, scriptTree) computes the Taproot tweak from the Merkle root and applies it to the internal key, yielding the output key. The output key commits to both the internal key (key path) and the script tree (script path).

- Render a human‑readable address: bitcoin.address.fromOutputScript(taprootOutput, network) turns the output (a P2TR scriptPubKey) into a bech32m address for your network.

Taproot 2-of-3 + MuSig2 key path

The magic happens when we combine a MuSig2 key path with a Taproot script path in a single P2TR address. One address, two ways to spend:

- Cooperative (key path): The signers run a MuSig2 session off-chain to produce a single Schnorr signature. On-chain, it looks like single-sig. Small, cheap & private.

- Fallback (script path): the same output also commits to a threshold tapscript (e.g., 2-of-3). If coordination fails, reveal the script and spend with a quorum. A bit of a larger footprint, but robust.

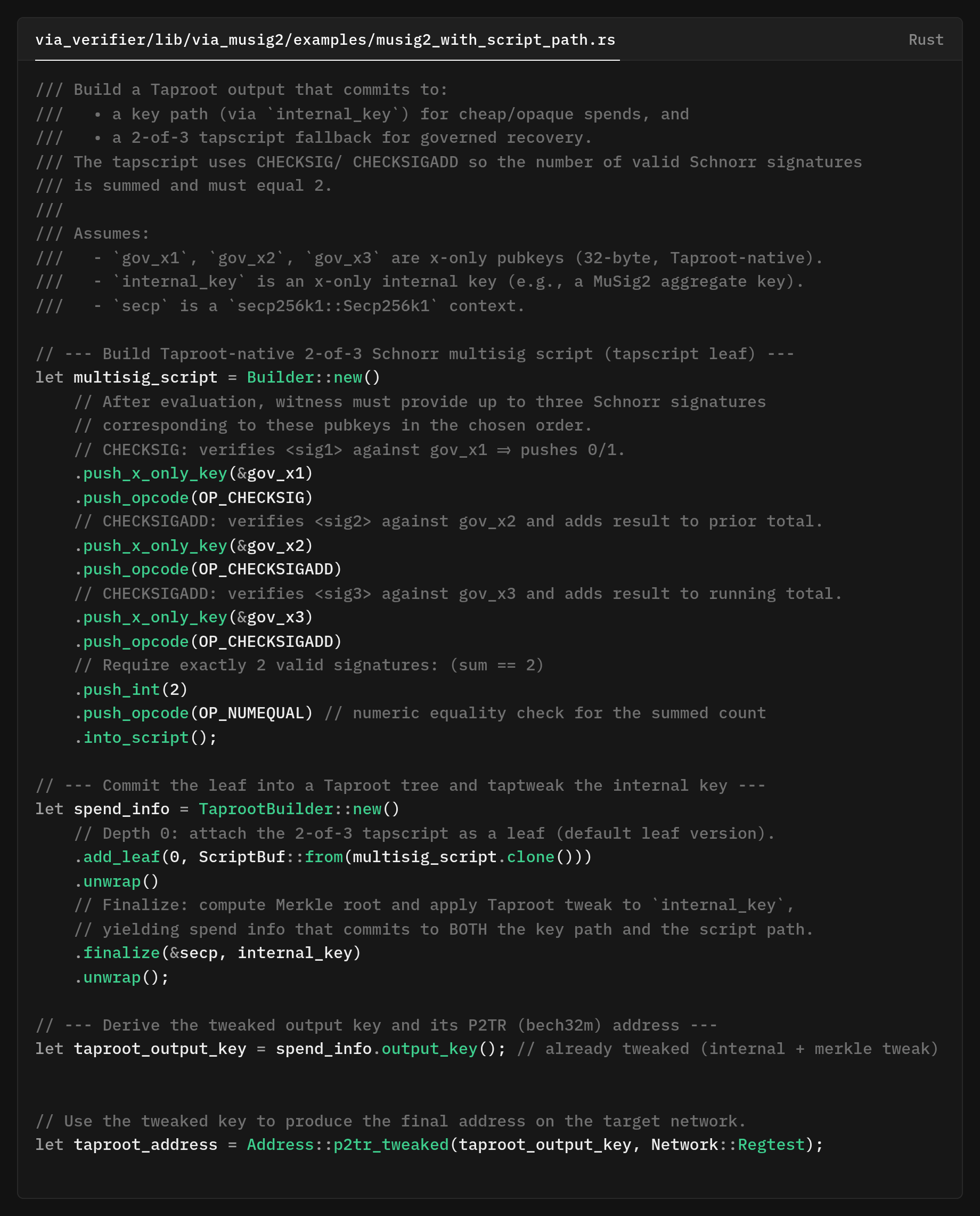

Note that this is an illustrative, oversimplified example of code. The real example code can be found here:

This code snippet creates a Taproot (P2TR) output that commits to two spend paths: a cooperative key path (MuSig2, single Schnorr sig on-chain) and a fallback script path (Taproot-native 2-of-3 threshold).

The threshold tapscript checks signatures against three x-only pubkeys and counts the valid ones: first via OP_CHECKSIG, then two OP_CHECKSIGADD to accumulate the total, and finally OP_NUMEQUAL to assert the sum is exactly 2. This is the Taproot-native encoding of “2-of-3”; legacy OP_CHECKMULTISIG is disabled in tapscript.
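For readers who want to see the byte layout, here is a dependency-free sketch that assembles such a script by hand. The function name `two_of_three_tapscript` is hypothetical and the keys in any usage are dummy bytes; production code would use a Bitcoin library's script builder rather than raw opcodes.

```rust
// Opcode values as defined by Bitcoin Script and BIP 342.
const OP_PUSHBYTES_32: u8 = 0x20;
const OP_2: u8 = 0x52;
const OP_NUMEQUAL: u8 = 0x9c;
const OP_CHECKSIG: u8 = 0xac;
const OP_CHECKSIGADD: u8 = 0xba;

/// Builds
/// `<pk1> OP_CHECKSIG <pk2> OP_CHECKSIGADD <pk3> OP_CHECKSIGADD OP_2 OP_NUMEQUAL`
/// from three 32-byte x-only public keys.
pub fn two_of_three_tapscript(pubkeys: &[[u8; 32]; 3]) -> Vec<u8> {
    let mut script = Vec::with_capacity(3 * 34 + 2);
    for (i, pk) in pubkeys.iter().enumerate() {
        script.push(OP_PUSHBYTES_32);
        script.extend_from_slice(pk);
        // The first key starts the counter; the rest add to it.
        script.push(if i == 0 { OP_CHECKSIG } else { OP_CHECKSIGADD });
    }
    script.push(OP_2); // required number of valid signatures
    script.push(OP_NUMEQUAL); // succeed only if the counter equals 2
    script
}
```

Each key contributes 34 bytes (one push opcode, 32 key bytes, one check opcode), so the whole leaf is 104 bytes.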

- Commit the leaf into the Taproot output using TaprootBuilder::new() → .add_leaf(...) → .finalize(&secp, internal_key)

This attaches the tapscript leaf and tweaks the MuSig2-aggregated internal key by the Merkle root, producing a tweaked output key that commits to both paths. We then derive the address from the tweaked output key: Address::p2tr_tweaked(...) creates the bech32m P2TR address (on Regtest in this example snippet).

How you spend it

In Taproot, a 2‑of‑3 threshold is represented with a tapscript that counts valid signature checks and compares the total to 2 (e.g., with OP_NUMEQUAL).

If only 2 signers are participating, the third “slot” is an empty push (<>). That empty element contributes 0 to the sum, so you’ll often see an empty item next to the two real Schnorr signatures in the witness.

<sig_K1> <> <sig_K3> <tapscript> <control_block>

// The two non-empty signatures each add 1

// The empty adds 0. The script enforces total == 2.

For the key path, the witness contains only the aggregated MuSig2 Schnorr signature, and the verifier takes the key path.
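The counting rule for the script path can be modeled without any cryptography. In this sketch, `SigSlot` and `threshold_satisfied` are hypothetical names and the `valid` flag stands in for real Schnorr verification, so it only illustrates how empty and non-empty slots are treated.

```rust
/// One signature slot in the witness: an empty push or a signature.
/// `valid` stands in for real Schnorr verification.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum SigSlot {
    Empty,
    Sig { valid: bool },
}

/// Mirrors the accumulation done by OP_CHECKSIG / OP_CHECKSIGADD followed by
/// `<threshold> OP_NUMEQUAL`.
pub fn threshold_satisfied(slots: &[SigSlot], threshold: usize) -> Result<bool, &'static str> {
    let mut count = 0;
    for slot in slots {
        match slot {
            // An empty element contributes 0 to the sum.
            SigSlot::Empty => {}
            // A valid signature contributes 1.
            SigSlot::Sig { valid: true } => count += 1,
            // Under BIP 342, a non-empty signature that fails verification
            // aborts the script instead of counting as 0.
            SigSlot::Sig { valid: false } => return Err("invalid non-empty signature"),
        }
    }
    // OP_NUMEQUAL demands an exact match, so three signatures also fail a
    // 2-of-3 script written this way.
    Ok(count == threshold)
}
```

This is why an absent signer must be represented by an empty element rather than a placeholder signature.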

The Taproot address is like one door with two different locks behind it:

- Lock A (key path): opens with one special “joint” key (a MuSig2 signature made together by the signers).

- Lock B (script path): opens with any 2 of the 3 individual keys, plus showing the rules document (the tapscript) and a proof that the rules were committed (the control block).

The witness is just the stuff you attach to the input being spent to prove you can open the lock.

Consensus and Security Requirements for Wallet Updates

We can't just update system wallets magically and expect everything to go well. We need a consensus mechanism.

Before a verifier can participate, it must prove it's using the consensus-recorded identity.

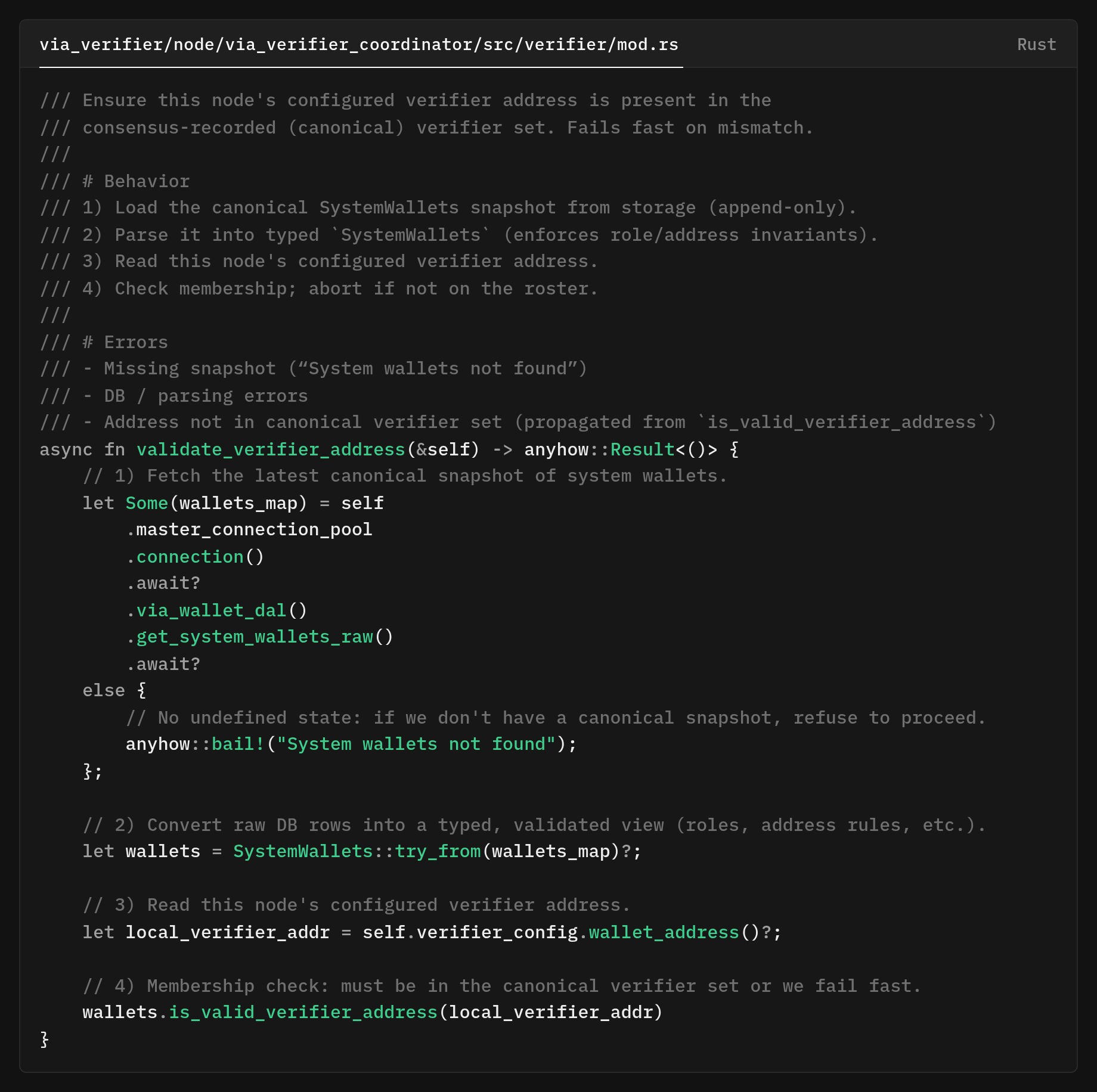

The rule is to confirm a match before participation. The verifier process will not run unless its configured Bitcoin address is present in the canonical verifier set, as enforced by async fn validate_verifier_address.

The snapshot comes from the consensus flow (inscribed on Bitcoin, properly signed, majority verified, TxID linked) and is stored append-only.

The check runs in three steps. First, load the canonical snapshot from storage via via_wallet_dal().get_system_wallets_raw(). If it is missing, bail early so the node never runs in an undefined state.

Second, type and validate it with SystemWallets::try_from(...), which encodes the role/address rules. Third, check membership with is_valid_verifier_address(cfg_addr) to make sure this node’s configured verifier address is on record. Proceed if it’s in the set; otherwise, abort. There's no room for ambiguous participation.

In short: on startup, a verifier proves it’s using the correct on-chain identity by checking its configured address against the canonical SystemWallets. Mismatch? Stop immediately. Match? Proceed.
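The three steps can be sketched as a single function. This is a simplified, synchronous model: the raw snapshot is passed in as an `Option`, the "verifier" key holding a comma-separated list is an assumption made for this example, and `try_from_raw` is a hypothetical stand-in for the real SystemWallets::try_from(...).

```rust
use std::collections::HashMap;

#[derive(Debug, Clone, PartialEq, Eq)]
pub struct SystemWallets {
    pub verifiers: Vec<String>,
}

impl SystemWallets {
    /// Builds the typed snapshot from raw storage (assumed layout, see above).
    pub fn try_from_raw(raw: &HashMap<String, String>) -> Result<Self, String> {
        let list = raw.get("verifier").ok_or("snapshot has no verifier entry")?;
        let verifiers: Vec<String> = list
            .split(',')
            .map(|s| s.trim().to_string())
            .filter(|s| !s.is_empty())
            .collect();
        if verifiers.is_empty() {
            return Err("verifier set is empty".to_string());
        }
        Ok(Self { verifiers })
    }

    pub fn is_valid_verifier_address(&self, addr: &str) -> bool {
        self.verifiers.iter().any(|v| v == addr)
    }
}

pub fn validate_verifier_address(
    raw_snapshot: Option<&HashMap<String, String>>,
    cfg_addr: &str,
) -> Result<(), String> {
    // 1. Load: a missing snapshot is an error, never an "empty but fine" state.
    let raw = raw_snapshot.ok_or("system wallets not found in storage")?;
    // 2. Type and validate the raw data.
    let wallets = SystemWallets::try_from_raw(raw)?;
    // 3. Membership: the configured address must be on record.
    if !wallets.is_valid_verifier_address(cfg_addr) {
        return Err(format!("verifier address {} is not in the canonical set", cfg_addr));
    }
    Ok(())
}
```

Every failure mode maps to an `Err`, so the caller has exactly one decision to make: start or stop.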

Type Safety and Role Validation Rules

In patch 222, we decided to encode wallet policy in types, so bad configurations are rejected before any component can act. A canonical SystemWallets snapshot is built from raw storage only if each role's address matches its expected Bitcoin script type.

If any role/address pair violates policy, construction fails upfront. This ensures every node runs against the same consensus view, and the runtime can't introduce drift or unsafe address kinds.

Address Types by System Component

- Sequencer / Verifier → P2WPKH (Native SegWit Single-Signature): Operational roles prioritize low bytes, low latency, and straightforward key handling. Validation ensures these addresses decode to P2WPKH.

- Bridge → P2TR (Taproot): The Bridge must support 2 spend paths: a cooperative MuSig2 key path and a threshold Tapscript fallback. Validation ensures the configured address decodes as P2TR, aligning with Taproot-based policies.

- Governance → P2WSH (Native SegWit Script-Hash): Transparent multisig policies. Validation ensures the address decodes to P2WSH so that on-chain spends reveal the policy (as intended for accountability).

Why this Approach is chosen

The only way to obtain SystemWallets is via a constructor that validates roles and their script types. If construction succeeds, we can safely assume that the policy is satisfied.

We can also have safe runtime updates. Wallet changes are inscribed/approved and written append-only to storage. Nodes rebuild the typed snapshot on init, and any mismatch is caught before the node acts.

The enum defined in core/lib/types/src/via_wallet.rs is the source of truth for wallet roles. Each variant (Sequencer, Verifier, Bridge, Gov) represents a specific system function and drives branching decisions across validation logic and consuming components.

The constructor builds a SystemWallets instance from raw config (a HashMap<String, String>), performing structured validation for each role:

- Parse the raw address string into a typed address.

- Validate the network (e.g., mainnet/testnet consistency).

- Enforce the correct script type based on the role:

  - Sequencer, Verifier → P2WPKH (native SegWit single-sig)

  - Bridge → P2TR (Taproot; key-path with optional script fallback)

  - Gov → P2WSH (native SegWit multisig)

✅ If all checks pass, the typed address is stored in SystemWallets.

❌ If any check fails, the constructor returns a validation error.
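A validating constructor of this kind can be sketched as follows. Two loud assumptions: the map keys ("sequencer", "verifier", "bridge", "governance") are chosen for illustration, and `classify_mainnet` is a toy classifier that looks only at the prefix and length of a mainnet address. It does not verify the bech32/bech32m checksum, so real code should decode addresses with a Bitcoin library.

```rust
use std::collections::HashMap;

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Role { Sequencer, Verifier, Bridge, Gov }

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ScriptType { P2wpkh, P2wsh, P2tr }

impl Role {
    pub fn expected_script_type(self) -> ScriptType {
        match self {
            Role::Sequencer | Role::Verifier => ScriptType::P2wpkh,
            Role::Bridge => ScriptType::P2tr,
            Role::Gov => ScriptType::P2wsh,
        }
    }
}

/// Toy classifier: prefix and length only, mainnet only, no checksum check.
pub fn classify_mainnet(addr: &str) -> Option<ScriptType> {
    match (addr.get(..4)?, addr.len()) {
        ("bc1q", 42) => Some(ScriptType::P2wpkh),
        ("bc1q", 62) => Some(ScriptType::P2wsh),
        ("bc1p", 62) => Some(ScriptType::P2tr),
        _ => None, // other networks or formats are rejected
    }
}

/// Fields are private, so the validating constructor is the only way in.
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct SystemWallets {
    sequencer: String,
    verifier: String,
    bridge: String,
    governance: String,
}

impl SystemWallets {
    pub fn try_from_raw(raw: &HashMap<String, String>) -> Result<Self, String> {
        let checked = |key: &str, role: Role| -> Result<String, String> {
            let addr = raw
                .get(key)
                .ok_or_else(|| format!("{}: missing address", key))?;
            let found = classify_mainnet(addr)
                .ok_or_else(|| format!("{}: not a recognized mainnet SegWit address", key))?;
            let expected = role.expected_script_type();
            if found != expected {
                return Err(format!("{}: expected {:?}, found {:?}", key, expected, found));
            }
            Ok(addr.clone())
        };
        Ok(Self {
            sequencer: checked("sequencer", Role::Sequencer)?,
            verifier: checked("verifier", Role::Verifier)?,
            bridge: checked("bridge", Role::Bridge)?,
            governance: checked("governance", Role::Gov)?,
        })
    }

    pub fn address(&self, role: Role) -> &str {
        match role {
            Role::Sequencer => &self.sequencer,
            Role::Verifier => &self.verifier,
            Role::Bridge => &self.bridge,
            Role::Gov => &self.governance,
        }
    }
}
```

Because the fields are private, holding a `SystemWallets` value is itself evidence that every role passed its script-type check.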

At startup, each system component (e.g., verifier, bridge, governance module) performs a runtime check:

- Loads the canonical SystemWallets snapshot.

- Verifies that its own configured address matches the expected role-specific address.

- Refuses to start if there's a mismatch (enforcing process safety and config integrity).

Invalid script types or wrong network prefixes are rejected during system boot, not at transaction time.

The use of typed addresses and role tags documents business rules and security expectations directly in logic (no manual checks).

Components operate on trusted, pre-validated addresses. No repeated address parsing or script-type checks are needed at the point of use.

Type safety → process safety: enforcing role-specific rules at construction time makes misconfiguration impossible at runtime.

This new implementation prevents an entire class of misconfiguration bugs and makes runtime wallet updates safe, auditable, and deterministic.

You can review patch 221 and patch 222 completely here. These were made within the same week.

Special thanks to Idir TxFusion for being the co-author and original author of patch 221 and patch 222.